Employing Integer Addition as an Approximation Method for Float Multiplication

Floating point calculations were once mostly the domain of scientific and business computing, but they are now found practically everywhere, from video games to large language models. A processor without floating point capabilities is of little use for most of today's workloads.

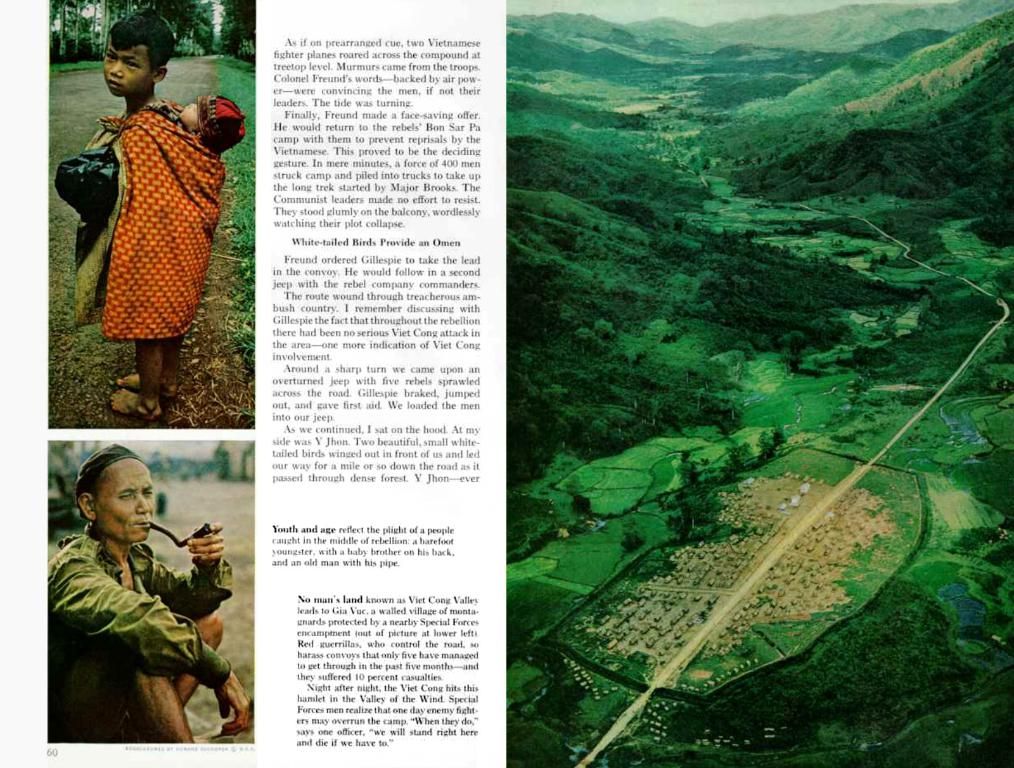

However, integer-based approximations can mimic floating point operations, as demonstrated by Malte Skarupke. The approach he examines, which replaces float multiplication with integer addition, was described in a paper by Hongyin Luo and Wei Sun. The idea is straightforward: add the bit patterns of the floating point inputs as integers, then adjust the exponent to arrive at an answer close to the actual product.
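To make the idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the code from Skarupke's article or from the paper. It assumes IEEE 754 single precision and positive, normal inputs, and it uses the bit pattern of 1.0 as the exponent correction; the helper names (`float_to_bits`, `bits_to_float`, `approx_mul`) are invented for this example. The trick works because the bit pattern of a positive float is roughly a scaled and shifted base-2 logarithm of its value, so adding bit patterns roughly adds logarithms.

```python
import struct

# Bit pattern of 1.0 in float32: the exponent bias (127) shifted past the
# 23 mantissa bits. Subtracting it removes the bias that was counted twice.
# Assumption: the plain bias constant; a tuned constant may behave differently.
ONE_BITS = 0x3F800000


def float_to_bits(x: float) -> int:
    """Round x to float32 and reinterpret its bytes as an unsigned 32-bit int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float(b: int) -> float:
    """Reinterpret the low 32 bits of b as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", b & 0xFFFFFFFF))[0]


def approx_mul(a: float, b: float) -> float:
    """Approximate a * b with one integer addition and one subtraction.

    Only meaningful for positive, normal float32 inputs whose product
    neither overflows nor underflows.
    """
    return bits_to_float(float_to_bits(a) + float_to_bits(b) - ONE_BITS)


if __name__ == "__main__":
    for a, b in [(2.0, 3.0), (1.5, 1.5), (3.0, 7.0)]:
        print(f"{a} * {b}: exact {a * b}, approx {approx_mul(a, b)}")
```

With this particular constant the result is exact when either input is a power of two, and it underestimates otherwise: 1.5 × 1.5 comes out as 2.0 rather than 2.25.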

As Skarupke's article points out, however, there are a number of issues and edge cases to be aware of, ranging from underflow and overflow to specific floating point inputs that need careful handling.
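The underflow and overflow cases can be illustrated with a short Python sketch. It assumes IEEE 754 single precision and positive inputs, and the guard logic (flush to zero on underflow, return infinity on overflow) is a hypothetical choice made for this example, not the handling described in the article. The function names `naive_mul` and `guarded_mul` are likewise invented here.

```python
import struct

ONE_BITS = 0x3F800000   # bit pattern of 1.0 in float32
MIN_NORMAL = 0x00800000  # smallest positive normal float32 bit pattern
INF_BITS = 0x7F800000    # bit pattern of +infinity in float32


def float_to_bits(x: float) -> int:
    """Round x to float32 and reinterpret its bytes as an unsigned 32-bit int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float(b: int) -> float:
    """Reinterpret the low 32 bits of b as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", b & 0xFFFFFFFF))[0]


def naive_mul(a: float, b: float) -> float:
    """Unguarded version: the integer result wraps around like a 32-bit register."""
    return bits_to_float(float_to_bits(a) + float_to_bits(b) - ONE_BITS)


def guarded_mul(a: float, b: float) -> float:
    """Same approximation for positive inputs, with explicit range checks.

    Assumption for this sketch: underflow flushes to zero and overflow
    returns infinity; denormals, negatives and NaN are not handled.
    """
    if a == 0.0 or b == 0.0:
        return 0.0
    raw = float_to_bits(a) + float_to_bits(b) - ONE_BITS
    if raw < MIN_NORMAL:   # exponent field would be zero or negative
        return 0.0
    if raw >= INF_BITS:    # exponent field would reach or pass 255
        return float("inf")
    return bits_to_float(raw)


if __name__ == "__main__":
    tiny = 1e-30
    # The true product (1e-60) underflows to 0 in float32. The naive version
    # instead wraps into the sign bit and yields a huge negative number.
    print("naive:  ", naive_mul(tiny, tiny))
    print("guarded:", guarded_mul(tiny, tiny))
```

The checks cost extra comparisons and branches, which is part of why the edge cases matter when judging whether the integer route is actually cheaper.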

Unlike scientific calculations, where even minor inaccuracies can add up to significant errors later on, graphics and large language models are less demanding when it comes to floating point precision. Results accurate to within roughly 7.5%, as offered by the integer approach, are good enough in these cases.

The question remains, though: is the integer method truly more efficient? Is it a game-changer, as the paper suggests, or simply a fallback like in the case of integer-only audio decoders for platforms devoid of an FPU?

Modern FP-focused vector processors like GPUs and their derivatives are capable of processing large volumes of data efficiently. The prospect of shifting this to the ALU of a CPU for energy savings appears somewhat optimistic.

Choosing between integer-based approximation and an FPU comes down to the specific application. Where energy efficiency is critical and approximate results are tolerable, integer operations may have the edge. Applications that demand high precision and dynamic range, such as scientific calculations, are better served by an FPU.

So, while integer approximations have their place, FPUs remain the preferred option wherever precise and accurate results are required. Newer formats like FP8 offer an intriguing balance between efficiency and precision, making them attractive alternatives in certain scenarios.

