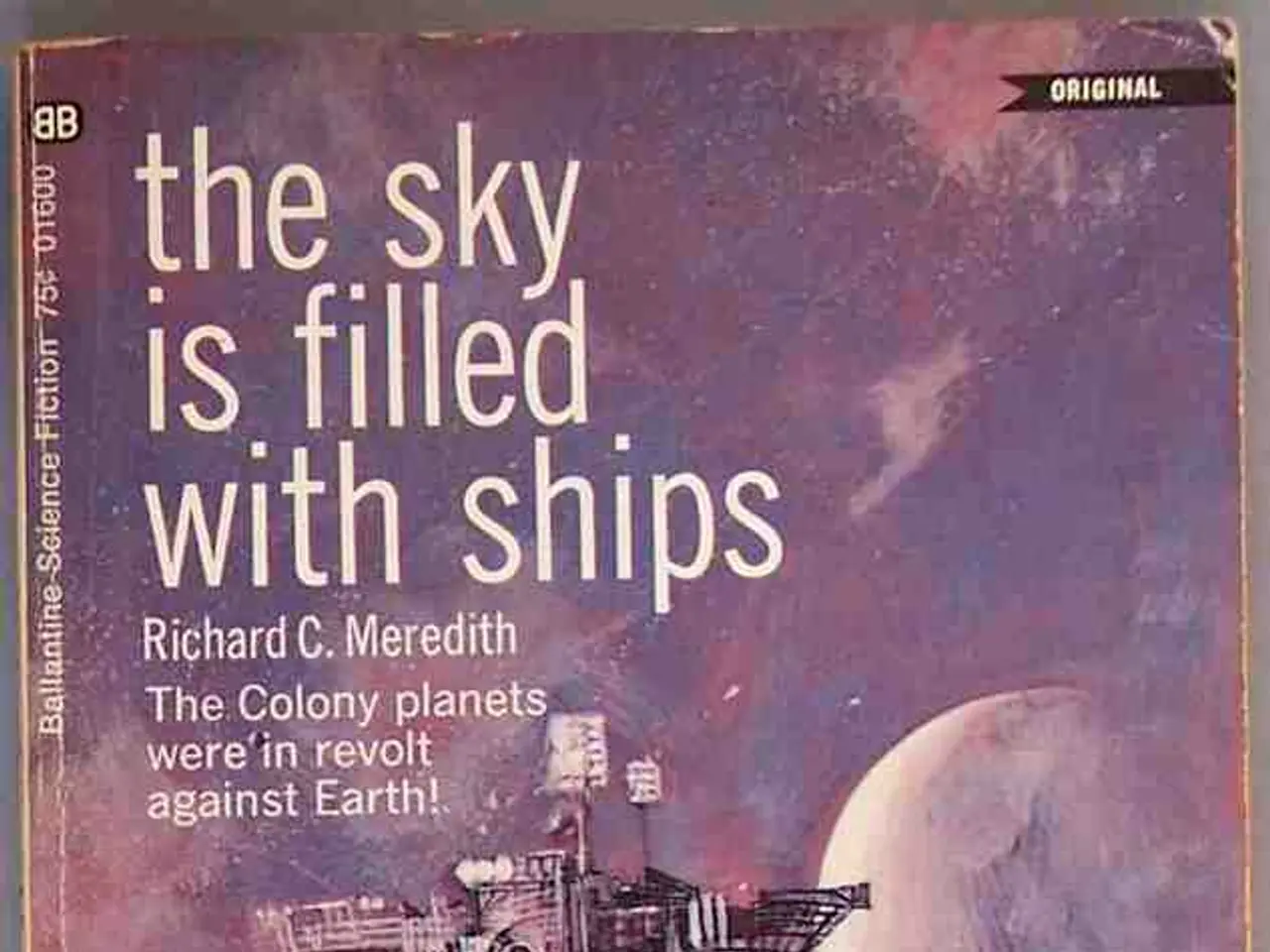

Bracing for an artificial intelligence catastrophe is as absurd as bracing for an extraterrestrial attack.

In technology policy, progress must often be carefully balanced against caution. This is particularly true in the rapidly evolving field of artificial intelligence (AI), where the potential benefits to society are immense, but so are the concerns about its risks.

Recent discussions on AI policy, such as the June 2025 California Report on Frontier AI Policy [1], emphasize grounding policymaking in empirical research and sound policy analysis. The report calls for evidence-based policymaking that draws on observed harms, predictions, modeling, and historical experience to build flexible, robust frameworks for shaping AI's development and societal impact.

This approach is not new. A similar balancing act played out in the early development of the internet, where underestimating threats, or prioritizing productivity and innovation over warnings that seemed alarmist, sometimes led to costly security breaches. The lesson from that period is that ignoring or downplaying early warnings can entrench vulnerabilities, which underscores the importance of balanced, evidence-informed policymaking.

Similarly, speculative alarmism about the possible existence of intelligent extraterrestrial life has generally been met with skepticism. Scientific and political communities have tended to take pragmatic, empirical approaches, developing protocols for scientific investigation and international cooperation rather than stoking sensationalist fears.

Famed physicist Stephen Hawking warned that making contact with intelligent alien life could spell the end for humanity. Yet nations have not stopped exploring space because of far-fetched, speculative claims that aliens might annihilate humanity. In the same way, policymakers should keep their focus on developing and deploying AI systems that could benefit society, such as those that improve health care, help children learn, and make transportation safer.

Policymakers are not restricting companies from developing more advanced spacecraft out of concern about unintentional contact with a hostile alien species. Nor, despite an increase in reported sightings of unidentified aircraft, are they prioritizing Earth's defenses against extraterrestrials at the same level as pandemic preparedness.

Fear of AI is likely to persist, and policymakers should remain clear-eyed amid the hyperbole, focusing on the policy work needed to ensure robust AI innovation and deployment. The approach exemplified today by California's AI policy efforts, and mirrored at some earlier moments in technology governance, is to reject alarmist narratives about novel threats that lack supporting evidence in favor of measured, research-backed policy frameworks designed to optimize benefits while managing risks. This balance reflects lessons from past technologies, where alarmism sometimes derailed productive development and, conversely, ignoring risks sometimes caused later harm.

[1] California Report on Frontier AI Policy, June 2025.

- The June 2025 California Report on Frontier AI Policy emphasizes grounding policymaking in empirical research and sound policy analysis, calling for evidence-based frameworks for AI's development and societal impact that are both flexible and robust.

- A similar balance between progress and caution can be observed in the early development of earlier technologies such as the internet, where underestimating threats sometimes led to costly security breaches, underscoring the importance of evidence-informed policymaking.

- In AI policy, alarmist narratives that lack evidence should be rejected in favor of a measured approach built on research-backed frameworks designed to optimize benefits while managing risks, as exemplified by California's AI policy efforts.

- Fear of AI is likely to persist, and policymakers should remain clear-eyed amid the hyperbole, drawing on lessons from past technologies to focus on the policy work that ensures robust AI innovation and deployment.